AI has always felt like two worlds that don’t really talk to each other. On one side we’ve got deep learning, the raw pattern recognizers that power everything from image recognition to ChatGPT. On the other side we’ve got symbolic AI, the old-school logic-and-rules-based systems that are brilliant at reasoning but terrible at learning from messy real-world data.

For decades, these two camps existed almost entirely in silos. One side said, “Neural nets are the future.” The other shot back, “Without logic, AI will never truly think.” And honestly? Both had a point.

This is exactly where Neuro-Symbolic AI walks in — not as a compromise, but as a bridge.

So, what’s Neuro-Symbolic AI?

Think of it as a hybrid brain.

- The neural part gives the system flexibility: it can look at raw pixels, sounds, or text and figure out patterns.

- The symbolic part gives it structure: the ability to reason, apply logic, follow rules, and explain itself.

Put simply, deep learning gives intuition and symbolic reasoning gives deliberate, rule-based thinking. Together, they aim to make AI not just powerful, but also trustworthy.
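To make that split concrete, here is a minimal sketch of the two halves working together. Everything in it is hypothetical: `fake_neural_classifier` only stands in for a trained network (it returns hard-coded probabilities), and `RULES` is a tiny hand-written rule base invented for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical stand-in for a trained neural network: maps an input to
# label probabilities. A real system would run a model here.
def fake_neural_classifier(image_id: str) -> Dict[str, float]:
    canned = {
        "photo_1": {"bird": 0.92, "plane": 0.08},
        "photo_2": {"bird": 0.15, "plane": 0.85},
    }
    return canned[image_id]

# Hand-written symbolic knowledge as (premise, conclusion) pairs.
RULES: List[Tuple[str, str]] = [
    ("bird", "animal"),
    ("animal", "living_thing"),
    ("plane", "machine"),
]

def infer(image_id: str, threshold: float = 0.5) -> Tuple[List[str], List[str]]:
    """Perceive with the 'neural' part, then forward-chain over RULES."""
    probs = fake_neural_classifier(image_id)
    facts = [label for label, p in probs.items() if p >= threshold]
    trace = [f"perceived {label} (p={probs[label]:.2f})" for label in facts]
    changed = True
    while changed:  # keep applying rules until nothing new is derived
        changed = False
        for premise, conclusion in RULES:
            if premise in facts and conclusion not in facts:
                facts.append(conclusion)
                trace.append(f"{premise} -> {conclusion}")
                changed = True
    return facts, trace

facts, trace = infer("photo_1")
print(facts)  # derived facts
print(trace)  # step-by-step explanation of how they were derived
```

The `trace` list is the interesting part: the neural half contributes a probability, while the symbolic half contributes a chain of steps you can read back.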

Why should we even care?

Because the AI we use today is powerful but fragile. Sure, GPT-5 can generate code and essays, and Midjourney can create art, but if you ask them to reason through multiple steps or follow strict rules, they often stumble. Meanwhile, a purely symbolic AI can solve logic puzzles but will freeze the moment you throw in a blurry real-world photo.

The magic of Neuro-Symbolic AI is that it can, in theory, do both. For example:

- A vision system that not only sees objects in an image but also understands the relationships between them (“the cup is on the table, not floating in midair”).

- A medical AI that doesn’t just predict “this scan looks cancerous” but also explains why in terms of medical knowledge graphs.
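The “cup is on the table” example can be sketched in a few lines. This is only an illustration under assumptions: the bounding boxes are made-up numbers standing in for an object detector's output, and `is_on` is a hand-written rule with an arbitrary pixel `tolerance`, not a standard definition.

```python
from dataclasses import dataclass

@dataclass
class Box:
    """Axis-aligned bounding box in image coordinates (y grows downward)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

def horizontal_overlap(a: Box, b: Box) -> float:
    """Width of the horizontal overlap between two boxes (0 if none)."""
    return max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))

def is_on(top: Box, support: Box, tolerance: float = 5.0) -> bool:
    """Symbolic rule: `top` is on `support` if its bottom edge sits at the
    support's top edge (within `tolerance` pixels) and they overlap horizontally."""
    touching = abs(top.y_max - support.y_min) <= tolerance
    return touching and horizontal_overlap(top, support) > 0

# Hypothetical detections, as a neural detector might produce them.
cup = Box("cup", 120, 80, 160, 130)
table = Box("table", 50, 132, 400, 300)
floating_cup = Box("cup", 120, 10, 160, 60)

print(is_on(cup, table))           # True: the cup rests on the table
print(is_on(floating_cup, table))  # False: this one hangs in midair
```

The detector only says where things are; the rule is what turns coordinates into a relationship the system can reason about.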

Why It Matters for the Future of AI

I personally feel this is where AI’s future is headed. Today’s deep learning models are insanely good at brute-force pattern matching, but they still make silly mistakes. Neuro-Symbolic approaches could fix some of the biggest pain points:

- Data Efficiency: Less dependency on giant datasets.

- Explainability: Decisions you can trace, not just black-box outputs.

- Common Sense: Incorporating reasoning we humans take for granted.

It’s like moving AI from being a “clever parrot” to being an actual thinker.

Real-World Applications

This isn’t just theory anymore. Neuro-Symbolic approaches are popping up in:

- Healthcare → combining patient data with medical ontologies for better diagnosis.

- Autonomous systems → self-driving cars that follow traffic rules, not just probabilities.

- Legal AI → parsing contracts where logic is as important as natural language.

- Robotics → machines that can actually reason about tasks rather than just mimicking movement.
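For the self-driving case, “rules, not just probabilities” can look like a symbolic filter sitting on top of a learned policy. The sketch below is purely illustrative: the action names, the `state` keys, and the two traffic rules are assumptions made up for this example, and `policy_probs` stands in for the output of a trained driving model.

```python
from typing import Any, Dict, Set

ACTIONS = {"accelerate", "brake", "turn_left"}

def allowed_actions(state: Dict[str, Any]) -> Set[str]:
    """Apply hard traffic rules and return the actions that remain legal."""
    allowed = set(ACTIONS)
    if state["traffic_light"] == "red":
        allowed -= {"accelerate", "turn_left"}
    if state["speed_kmh"] >= state["speed_limit_kmh"]:
        allowed.discard("accelerate")
    return allowed  # "brake" is never removed, so this set is never empty

def choose_action(policy_probs: Dict[str, float], state: Dict[str, Any]) -> str:
    """Pick the most probable action among those the rules permit."""
    legal = allowed_actions(state)
    candidates = {a: p for a, p in policy_probs.items() if a in legal}
    return max(candidates, key=candidates.get)

# Hypothetical output of a learned policy: it would like to accelerate.
policy_probs = {"accelerate": 0.6, "brake": 0.3, "turn_left": 0.1}
red = {"traffic_light": "red", "speed_kmh": 30, "speed_limit_kmh": 50}
green = {"traffic_light": "green", "speed_kmh": 30, "speed_limit_kmh": 50}

print(choose_action(policy_probs, red))    # brake
print(choose_action(policy_probs, green))  # accelerate
```

The network still does the judgment calls; the rules just guarantee that certain lines never get crossed, no matter how confident the model is.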

Deep Research Corner

Now, let’s get into the nerdy but fascinating side, because this field is still at a nascent stage, living mostly in research labs and experiments.

- DARPA’s Explainable AI (XAI) initiative pushed neuro-symbolic methods to make AI interpretable. Instead of a “black box” neural net, these hybrids can actually trace back why they reached a conclusion.

- Logic Tensor Networks (LTNs): These extend neural nets to reason with logic-based constraints. Imagine training a neural model, but every prediction has to “respect the rules of physics” or domain knowledge.

- Neuro-Symbolic Concept Learner (NS-CL): Developed at MIT, this system learns visual concepts (like shapes, colors, and spatial relations) and then reasons over them symbolically. It’s basically giving an AI both perception and reasoning.

- Open Challenges:

  - Scalability → Symbolic reasoning is powerful, but it struggles with massive, noisy datasets.

  - Integration → Seamlessly marrying neural and symbolic methods is hard. Models either lean too much on one side or break when scaled.

  - Benchmarks → Unlike pure ML, where we’ve got ImageNet and GLUE, neuro-symbolic AI lacks widely accepted benchmarks. Researchers are still figuring out how to measure “reasoning ability” properly.

- Recent Papers & Directions:

  - “Neurosymbolic AI: The 3rd Wave” (d’Avila Garcez and Lamb, arXiv, 2020), whose title echoes DARPA’s “three waves of AI” framing.

  - Work from Stanford, MIT, and IBM Research focusing on hybrid architectures.

  - Use of knowledge graphs + transformers, where symbolic knowledge grounds large language models.
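The idea behind Logic Tensor Networks, that predictions should “respect the rules”, can be sketched as a fuzzy-logic penalty. This is a toy in the spirit of LTNs, not the LTN library or the exact formulation from the paper: the truth values are hard-coded instead of coming from neural networks, the implication is the Reichenbach form, and the universal quantifier is approximated by a plain mean, which is a simplifying assumption on my part.

```python
from typing import Dict

def implies(a: float, b: float) -> float:
    """Reichenbach fuzzy implication: truth of (a -> b) for a, b in [0, 1]."""
    return 1.0 - a + a * b

def forall(truths) -> float:
    """Universal quantifier approximated by the mean truth value."""
    truths = list(truths)
    return sum(truths) / len(truths)

def rule_loss(cat: Dict[str, float], animal: Dict[str, float]) -> float:
    """Penalty for violating 'for all x: cat(x) -> animal(x)'."""
    satisfaction = forall(implies(cat[x], animal[x]) for x in cat)
    return 1.0 - satisfaction

# Hypothetical predicate outputs; in a real system these would be the
# (differentiable) outputs of neural networks.
cat = {"tom": 0.9, "rex": 0.1, "rock": 0.05}
animal_consistent = {"tom": 0.95, "rex": 0.9, "rock": 0.02}
animal_violating = {"tom": 0.2, "rex": 0.9, "rock": 0.02}  # says Tom is a cat but not an animal

loss_ok = rule_loss(cat, animal_consistent)
loss_bad = rule_loss(cat, animal_violating)
print(loss_ok < loss_bad)  # True: breaking the rule costs more
```

Because every operation here is just arithmetic on values in [0, 1], the same penalty could be added to a training loss and pushed down by gradient descent, which is the core trick that lets logic and learning share one objective.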

This is the kind of stuff where academia and industry are both actively experimenting, and the breakthroughs here could shape the next era of AI.
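The perceive-then-reason pattern behind systems like NS-CL can also be shown in miniature. To be clear about what is assumed: the `scene` below is hand-written rather than predicted from an image, the `program` is hard-coded rather than parsed from a question, and the three operations are invented for this sketch. NS-CL itself learns its concepts and is considerably more involved.

```python
from typing import Any, Dict, List

Scene = List[Dict[str, str]]

# Hypothetical perception output: one dict of predicted concepts per object.
scene: Scene = [
    {"shape": "cube", "color": "red", "size": "large"},
    {"shape": "sphere", "color": "red", "size": "small"},
    {"shape": "cube", "color": "blue", "size": "small"},
]

def filter_objects(objs: Scene, attribute: str, value: str) -> Scene:
    """Keep only the objects whose `attribute` equals `value`."""
    return [o for o in objs if o[attribute] == value]

def run_program(program: List[tuple], scene: Scene) -> Any:
    """Execute (op, *args) steps in order; each step consumes the previous result."""
    result: Any = scene
    for op, *args in program:
        if op == "filter":
            result = filter_objects(result, *args)
        elif op == "count":
            result = len(result)
        elif op == "exists":
            result = len(result) > 0
        else:
            raise ValueError(f"unknown op: {op}")
    return result

# "How many red cubes are there?"
how_many_red_cubes = [("filter", "color", "red"), ("filter", "shape", "cube"), ("count",)]
print(run_program(how_many_red_cubes, scene))  # 1

# "Is there a green sphere?"
any_green_sphere = [("filter", "color", "green"), ("filter", "shape", "sphere"), ("exists",)]
print(run_program(any_green_sphere, scene))  # False
```

Each step in the program is inspectable, so when the answer is wrong you can tell whether perception mislabeled an object or the reasoning took a wrong turn.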

The Future

We’re still early in this journey. Right now, Neuro-Symbolic AI feels like a startup idea with huge promise but lots of unanswered questions. Yet, if it works, we’re talking about multimodal AI that can see, think, and explain, not just predict.

To me, that’s the real dream: moving from AI as a clever parrot to AI as a real problem solver. Not replacing human intelligence, but augmenting it with something that’s both data-driven and logically sound.

Maybe that’s when AI will stop being a “tool” and start becoming a real partner in thought (it already is 🤫).

References:

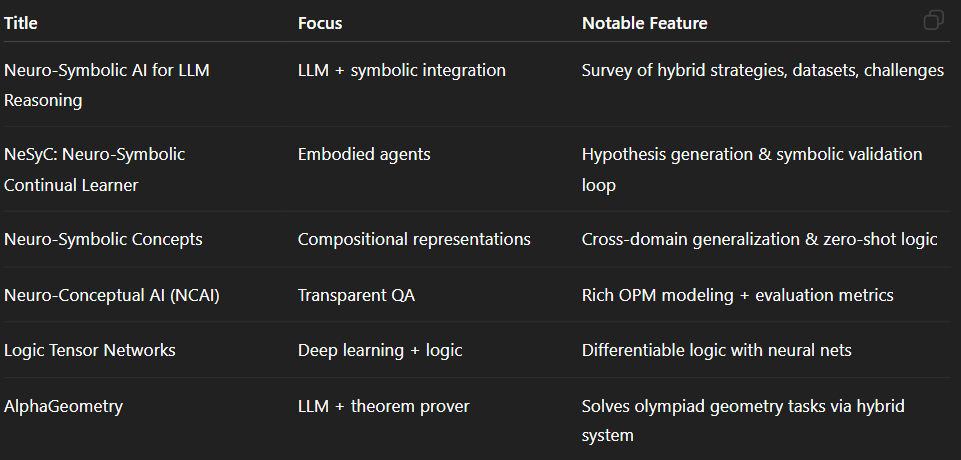

- Neuro-Symbolic Artificial Intelligence: Towards Improving the Reasoning Abilities of Large Language Models (arXiv, Aug 19 2025)

- Neuro-Symbolic Concepts (arXiv, May 2025)

- NeSyC: A Neuro-Symbolic Continual Learner For Complex Embodied Tasks In Open Domains (arXiv, Mar 2025)

- Neuro-Conceptual Artificial Intelligence: Integrating OPM with Deep Learning to Enhance Question Answering Quality (arXiv, Feb 2025)